SaaS-scale automation for big data

Support your digital transformations with a powerful platform for orchestrating data management tasks for large data sets.

Facilitate consistent big data processes

Automate complex processes end to end, incorporating all types of data and data sources, with an orchestration tool built to manage dependencies, standardize governance and keep your data safe.


Secure orchestration

Establish reliable, wide-scale automation of mission-critical processes, including ETL and metadata cataloging, while maintaining compliance and first-rate security.

Broad integrations

RunMyJobs by Redwood simplifies data management across any remote system with out-of-the-box integrations and templates built on an extensible framework.

Uniform data processing

Gain real control over your data pipelines, no matter how big or complex, with change management, real-time auditing and advanced analytics.

Hybrid-ready architecture

Available as SaaS or self-hosted, RunMyJobs enables you to rapidly scale and innovate across your unique mix of on-premises and cloud systems with minimal overhead.

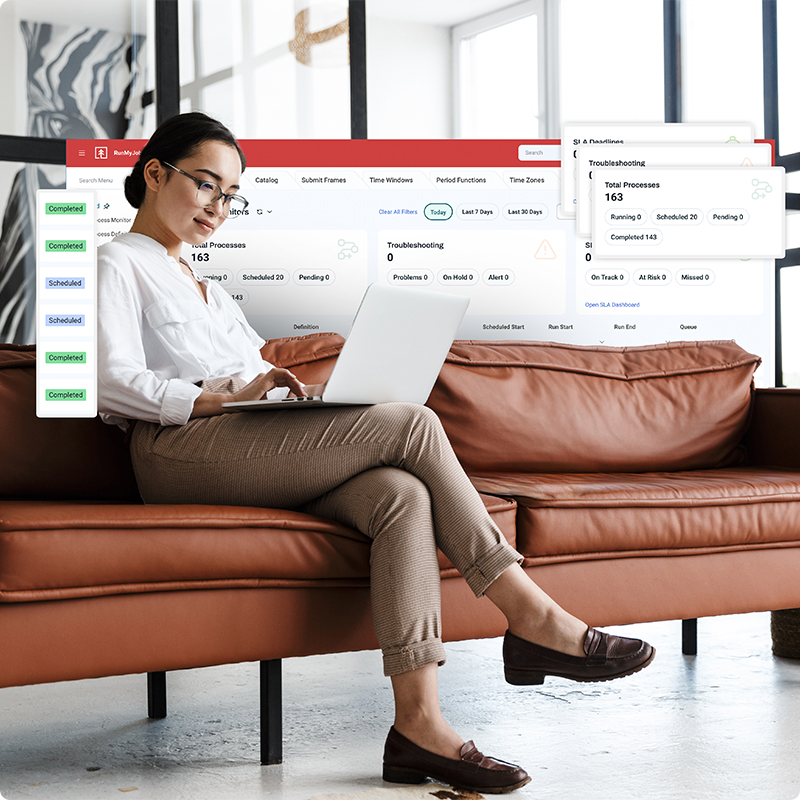

Achieve true scalability for big data use cases

The volume of enterprise data is growing every day, and you need big data technologies that can keep up.

As a SaaS-native automation platform with centralized workflow scheduling and low-code/no-code automation design, RunMyJobs can handle the scale of your real-time data processing, intelligent data integration and big data analytics needs.

Get visibility into big data processes across your ecosystem and apply predictive models organization-wide to drive better business decisions.

Extend automations across your infrastructure

Your data scientists and decision-makers should have access to the exact ingestion, transformation, storage and analysis tools they prefer.

Discover hundreds of built-in integrations and explore the RunMyJobs connector catalog to expand your data engineering capabilities with servers, cloud apps, business services and mainframes. Integrate seamlessly with big data tools like Apache Hadoop. New connectors and templates are added to the catalog regularly, so you get immediate access to the latest integrations and updates.

Ingest and process data streams from Internet of Things (IoT) devices, sensors, applications and more. Build your own integrations in minutes with the Connector Wizard, or use SOAP and REST APIs to connect to inbound, outbound and asynchronous web services.

Protect your data at every stage

When you’re dealing with large volumes and many types of data, it’s essential to use automation solutions that help you comply with industry regulations and guarantee secure data exchange with trading partners.

With RunMyJobs, every channel uses secure, cloud-ready communication: TLS encryption protects direct connections to applications and services, and you host the secure gateway in your chosen environment. Redwood Software has extensive security certifications, including ISO 27001, ISAE 3402 Type II, SSAE 18 SOC 1 Type II and SOC 2 Type II.

Build your best-fit data infrastructure

Every company is becoming a big data company, but that doesn’t mean everyone manages big data the same way.

You can choose to use RunMyJobs as SaaS or to self-host. Because the platform scales across services and applications without agents, it’s easy to achieve a lightweight and flexible deployment.

Incur lower overhead and enjoy more flexibility with no server agents for application connections. Deploy a featherweight agent for direct system control.

SAP-compatible big data management software

Already an SAP user? Redwood solutions are the best choice for workload automation.

Redwood has a two-decade-long relationship with SAP and the most SAP certifications of any job scheduler. RunMyJobs supports clean core principles with pre-built SAP templates and connectors to integrate seamlessly with SAP ECC, SAP S/4HANA, SAP Datasphere and more.

Achieve autonomous execution of your data management tasks and scalable access to your mission-critical business data from your SAP and non-SAP systems.

Related resources

Read more about how to reap the benefits of RunMyJobs for data management and orchestrating big data processes.

Big data FAQs

What is big data?

Big data refers to extremely large and complex data sets that cannot be efficiently managed, processed or analyzed using traditional data management tools and methods. Big data is often characterized by the "3 Vs": Volume, Velocity and Variety.

Volume refers to the vast amount of data generated from different sources, such as social media, sensors, transactions and more.

Velocity addresses the speed at which new data is generated and the need to process it in near-real time to derive actionable insights.

Variety points to the different formats of data, such as structured (databases), semi-structured (XML, JSON) and unstructured data (text, video, audio).

Many organizations use cloud computing platforms to store and process big data while ensuring scalability and cost efficiency. Advanced technologies like artificial intelligence (AI) allow companies to extract more complex insights.

Big data is valuable because it allows organizations to analyze patterns, trends and associations, especially when working with data from multiple, diverse sources. Analyzing it can lead to better decision-making, enhanced customer experiences and operational efficiency.

Learn more about data movement maturity and how to ensure your data is accessible.

What is orchestration in big data?

Orchestration in big data refers to the automated coordination and management of various processes involved in handling large-scale data workflows. These workflows typically involve data ingestion, data transformation, data analysis and data output. In the context of big data, orchestration ensures that these processes are executed in a logical sequence and at the right time, often across different platforms, tools and services.

Orchestration handles dependencies between tasks and manages their execution, ensuring that resources are optimally allocated and that the entire data pipeline runs smoothly. This is particularly important in big data environments, where multiple data processing tools (such as Apache Hadoop, Apache Spark and Kafka) need to work together to efficiently process large amounts of data.

For example, in a big data orchestration scenario, the system might automate the following:

- Ingest data from multiple sources (cloud storage, databases, APIs).

- Run data cleaning and transformation processes.

- Trigger machine learning models for analysis.

- Move processed data into data warehouses or dashboards for business intelligence.
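The dependency handling described above can be sketched in a few lines of Python. This is a minimal, generic illustration, not RunMyJobs code: the task names, the `DEPENDS_ON` mapping and the `run_workflow` helper are all hypothetical, and the data is a tiny in-memory stand-in for real sources. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to make sure each task runs only after the tasks it depends on.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks; each one reads from and writes to a shared context dict.
def ingest(ctx):
    # Stand-in for pulling data from cloud storage, databases or APIs.
    ctx["raw"] = [" 42 ", "17", "", "8 "]

def clean(ctx):
    # Strip whitespace, drop empty values and convert to integers.
    stripped = (s.strip() for s in ctx["raw"])
    ctx["clean"] = [int(v) for v in stripped if v]

def analyze(ctx):
    # Stand-in for triggering a model: here, just a mean.
    ctx["mean"] = sum(ctx["clean"]) / len(ctx["clean"])

def publish(ctx):
    # Stand-in for loading results into a warehouse or dashboard.
    ctx["report"] = f"mean={ctx['mean']:.1f}"

TASKS = {"ingest": ingest, "clean": clean, "analyze": analyze, "publish": publish}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDS_ON = {
    "ingest": set(),
    "clean": {"ingest"},
    "analyze": {"clean"},
    "publish": {"analyze"},
}

def run_workflow(tasks, depends_on):
    """Run every task in an order that respects its dependencies."""
    ctx = {}
    order = list(TopologicalSorter(depends_on).static_order())
    for name in order:
        tasks[name](ctx)
    return order, ctx

if __name__ == "__main__":
    order, ctx = run_workflow(TASKS, DEPENDS_ON)
    print(order)
    print(ctx["report"])
```

A production orchestrator adds scheduling, retries, monitoring and cross-system execution on top of this ordering step, but the core idea is the same: declare the dependencies once and let the tool work out a valid run order.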

Learn more about job orchestration and how the right tools help increase your team’s efficiency.

What is a big data pipeline?

A big data pipeline is a system that automates the flow of data from its source to its final destination, often passing through several stages of processing along the way. The stages in a big data pipeline typically include data ingestion, transformation, storage, analysis and visualization. A well-designed data pipeline ensures that data flows efficiently and reliably through each of these stages, regardless of the volume, velocity or variety of the data.

Key components of a big data pipeline include:

- Data ingestion: Collecting data from various sources, such as sensors, databases, APIs or cloud services

- Data processing: Transforming raw, unstructured or semi-structured data into a format that can be analyzed

- Data storage: Storing data in an appropriate storage system like a data lake (Hadoop, S3), a relational database or a NoSQL database

- Data analysis: Applying algorithms, models or queries to analyze the data and extract meaningful insights

- Data output/visualization: Sending the processed data to dashboards, reports or other business intelligence tools for end-user consumption
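To make the components listed above concrete, here is a small Python sketch that chains them together. It is an illustration under stated assumptions: the sample sensor records, the function names and the in-memory list standing in for a data lake or database are all hypothetical, and a real pipeline would read from and write to external systems.

```python
import json
from statistics import mean

# Hypothetical raw input: JSON lines from sensors, including one bad record.
RAW_EVENTS = [
    '{"sensor": "a", "temp_c": 21.5}',
    '{"sensor": "b", "temp_c": 19.0}',
    "not json",
    '{"sensor": "a", "temp_c": 22.5}',
]

def ingest(lines):
    """Data ingestion: yield raw lines from a source."""
    yield from lines

def process(lines):
    """Data processing: parse JSON and skip records that fail to parse."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue

def store(records, sink):
    """Data storage: append records to a sink (a list stands in for a real store)."""
    sink.extend(records)
    return sink

def analyze(records):
    """Data analysis: average temperature per sensor."""
    by_sensor = {}
    for record in records:
        by_sensor.setdefault(record["sensor"], []).append(record["temp_c"])
    return {sensor: mean(values) for sensor, values in by_sensor.items()}

def render(summary):
    """Data output: format the summary as report lines, sorted by sensor."""
    return [f"{sensor}: {avg:.1f} C" for sensor, avg in sorted(summary.items())]

def run_pipeline(lines):
    stored = store(process(ingest(lines)), [])
    return render(analyze(stored))

if __name__ == "__main__":
    for line in run_pipeline(RAW_EVENTS):
        print(line)
```

Because ingestion and processing are written as generators, records flow through one at a time instead of being loaded all at once, which is one common way to keep memory use flat as volume grows.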

Learn more about data pipeline automation and how it helps enterprise companies stay competitive.

What is automation in data processing?

Automation in data processing refers to the use of software tools and scripts to automatically carry out tasks that were traditionally done manually. In the context of big data, automation can streamline tasks such as data ingestion, cleaning, transformation, analysis and reporting, significantly reducing the time and effort required for handling data.

Examples of automation in data processing include using workflow orchestration tools (such as Apache Airflow) to automatically trigger data pipelines, scheduling automated reports or leveraging machine learning models to process and analyze data in real time. Automation is essential for modern data-driven organizations that need to process vast amounts of data quickly, accurately and consistently.

Read more to understand the power of data management automation.