Orchestrate your data pipelines with confidence

Improve visibility and control of end-to-end data management activities for better business outcomes.

Reliable pipeline automation

Your business runs on data, and RunMyJobs by Redwood enables you to harness its power by building efficient pipelines that encourage data-driven decision-making.

Streamlined control

Automate the scheduling, execution and monitoring of data orchestration tasks to deliver reliable and timely end-to-end data pipeline operations and meet your business objectives.

Assured success

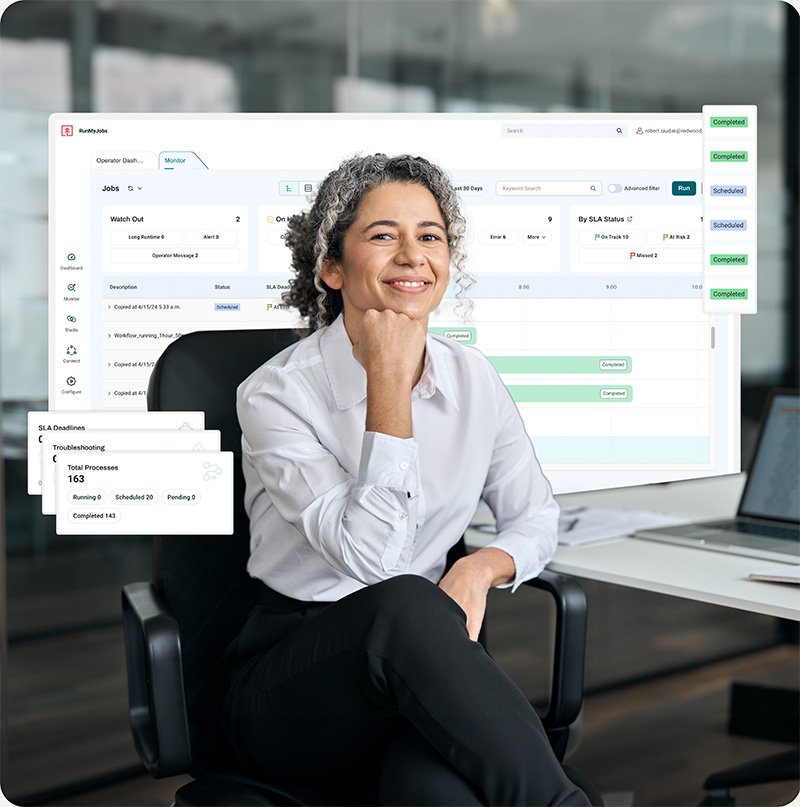

Govern and monitor your organization’s data management workflows in real time with powerful workflow control logic that supports problem management and predictive SLA reporting.

Broad visibility

Bring disparate and siloed data management tasks into a single workflow view for comprehensive visibility, consistency and compliance across any data pipeline.

Extensible integration framework

Leverage out-of-the-box connectors and easily build new, reusable integrations with the RunMyJobs Connector Wizard.

Scalable modern SaaS platform

Experience a cloud-native SaaS platform built for scale and connectivity across any technology stack.

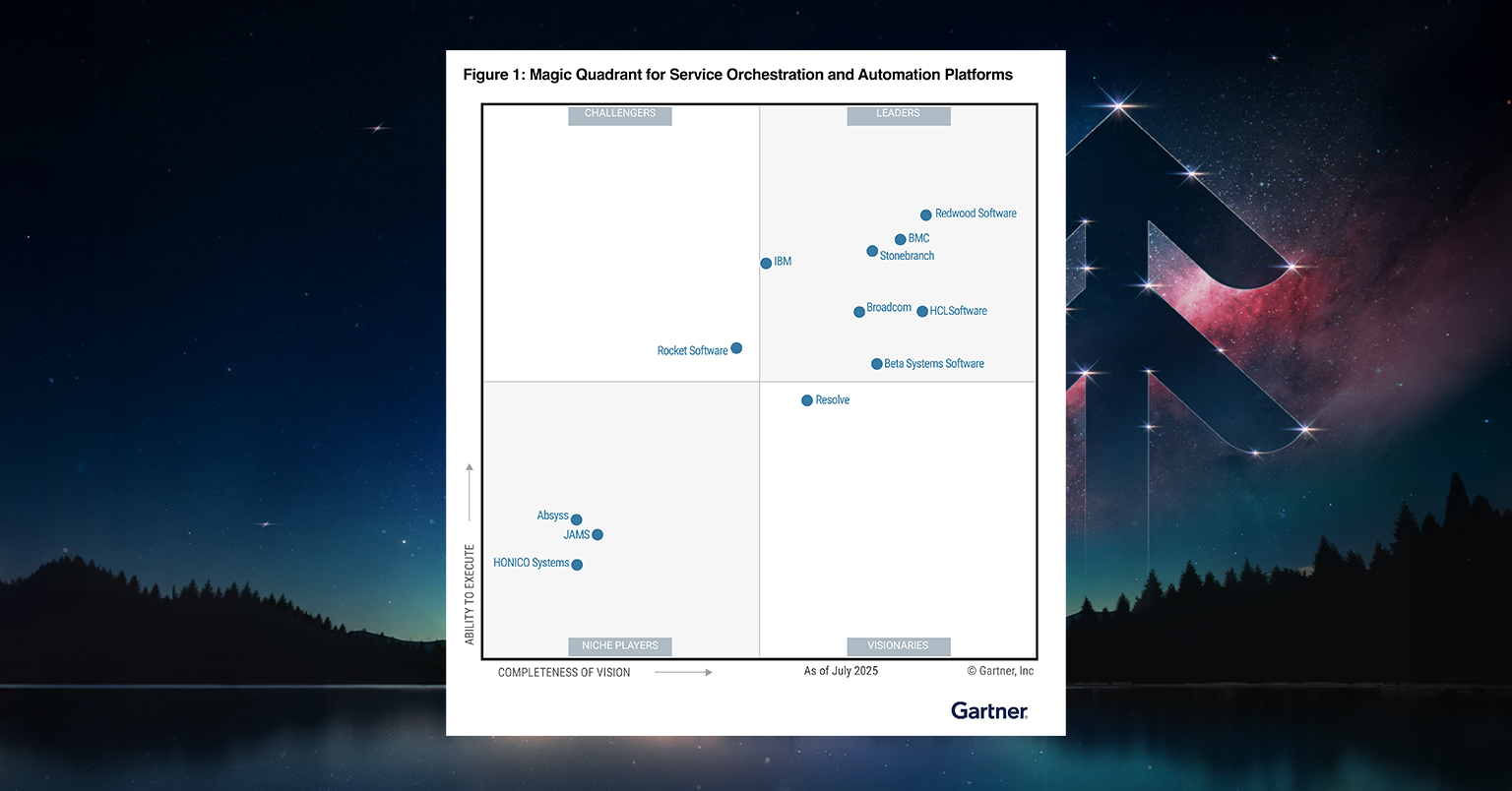

Gartner® 2025 Magic Quadrant™ for SOAP

Gartner has named Redwood a Leader, positioned furthest in Completeness of Vision and highest for Ability to Execute, in its Magic Quadrant™ for Service Orchestration and Automation Platforms (SOAPs).

Why prioritize data orchestration?

Data orchestration use cases are varied but generally involve collecting, storing, organizing and analyzing diverse and disconnected datasets to meet the needs of your business and stakeholders.

Data management activities include:

- Ingesting data to move or copy it from one system to another for further processing

- Transforming data by manipulating data types or merging datasets

- Processing data to gain insights or make it usable in other processes

There are additional requirements for data security, archival and other components of a data management strategy.

All of these activities take place in complex and diverse environments, including SaaS and hybrid systems with differing skill sets and process management requirements.

Monitoring and managing these activities in a common framework in real time minimizes or eliminates human intervention and increases efficiency.

Unify diverse systems in a single data pipeline

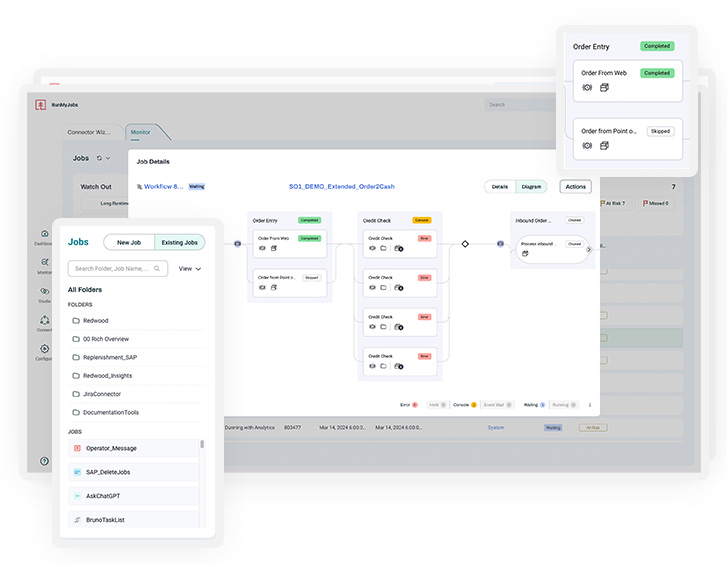

RunMyJobs integrates with a wide range of technologies that interact in critical stages of business data pipelines.

Integrating directly with ERP, CRM and financial tools as well as databases and big data platforms, RunMyJobs allows you to easily build processes using data management tools, such as extract, transform and load (ETL) and business intelligence (BI) solutions.

Experience the ease and accessibility of out-of-the-box integrations for:

- Data storage and processing

- Data integration and transformation

- Data warehousing and analytics

- Business solutions

Or, integrate and interact via APIs by building your own connectors using the Connector Wizard.

Compose resilient workflows for end-to-end data orchestration

Data management workflows can involve complex and strict dependencies, so a single failed or delayed task can jeopardize the success of the entire data pipeline.

With RunMyJobs, you can configure workflows to adapt and respond to the output and status of individual tasks and remote system statuses. These trigger additional logic and actions that ensure the success of your end-to-end workflow.

Powerful command and control mechanisms build error-checking, problem resolution and alerting into the workflows themselves.
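As a rough illustration of building error-checking and alerting into a workflow step, the generic Python sketch below retries a failing step and raises an alert only if every attempt fails. The function `run_with_retries`, its parameters and the `flaky_extract` step are hypothetical names chosen for this example; they are not the RunMyJobs API, which exposes this kind of control through its own workflow configuration.

```python
import time
from typing import Callable, List, Optional


def run_with_retries(
    task: Callable[[], str],
    max_attempts: int = 3,
    backoff_seconds: float = 0.0,
    alert: Callable[[str], None] = print,
) -> str:
    """Run a workflow step, retrying on failure and alerting once if all attempts fail."""
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return task()  # success: hand the output to the next step
        except Exception as exc:  # a real workflow would catch narrower errors
            last_error = exc
            if attempt < max_attempts:
                time.sleep(backoff_seconds * attempt)  # linear backoff between retries
    # Every attempt failed: notify someone, then stop this branch of the workflow.
    alert(f"step failed after {max_attempts} attempts: {last_error}")
    raise RuntimeError("step exhausted its retries") from last_error


# Usage: a hypothetical extract step whose remote system is briefly unavailable.
calls = {"count": 0}


def flaky_extract() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("remote system unavailable")
    return "rows extracted"


alerts: List[str] = []
print(run_with_retries(flaky_extract, max_attempts=3, alert=alerts.append))  # rows extracted
```

Here the step succeeds on its third attempt, so no alert is sent. Keeping the retry and alert logic in the step itself, rather than in a separate monitoring script, is what lets the workflow respond to a failure without human intervention.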

RunMyJobs is built for scale, making it easy to create and use standardized components across all your workflows, including specific configuration items and even entire workflows.

Deliver accurate data insights on time

Most data orchestration processes are time-critical. If you integrate and analyze sales and customer data to drive business processes, for example, you must ensure your data pipelines complete successfully and on time.

RunMyJobs makes it simple to identify risks to your workflows and determine how to remediate problems. Get early warnings when critical deadlines are predicted to slip so you can address them before they impact the business.

Configure SLAs and thresholds with customizable escalations and alerts to ensure the right people are informed at the right time with the right information.
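The general idea behind a predictive SLA check can be sketched in a few lines of Python: estimate when the remaining steps will finish, compare that estimate with the deadline and map the result to an escalation level. This is a simplified sketch, not how RunMyJobs computes its predictions. It assumes the remaining steps run one after another and that each has a known expected duration, and the `ESCALATION` recipients and 30-minute warning margin are hypothetical values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class RemainingStep:
    name: str
    expected_duration: timedelta  # assumed: e.g. an average of past runtimes


# Hypothetical escalation policy: who is notified at each risk level.
ESCALATION: Dict[str, List[str]] = {
    "on-track": [],
    "at-risk": ["pipeline-owner"],
    "breach-predicted": ["pipeline-owner", "on-call-manager"],
}


def sla_status(
    now: datetime,
    deadline: datetime,
    remaining: List[RemainingStep],
    warning_margin: timedelta = timedelta(minutes=30),
) -> str:
    """Classify SLA risk for steps that run one after another."""
    predicted_finish = now + sum((s.expected_duration for s in remaining), timedelta())
    if predicted_finish > deadline:
        return "breach-predicted"  # the deadline is expected to slip
    if deadline - predicted_finish < warning_margin:
        return "at-risk"  # on time, but with little slack left
    return "on-track"


now = datetime(2025, 1, 6, 5, 0)
deadline = datetime(2025, 1, 6, 7, 0)
steps = [
    RemainingStep("transform", timedelta(minutes=45)),
    RemainingStep("load", timedelta(minutes=60)),
]
status = sla_status(now, deadline, steps)
print(status, ESCALATION[status])  # at-risk ['pipeline-owner']
```

With 105 minutes of work left at 05:00, the pipeline is predicted to finish at 06:45, only 15 minutes before the 07:00 deadline, so the check raises an early warning to the pipeline owner rather than waiting for the deadline to be missed.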

Easily integrate with all your data orchestration tools

Get instant access to integrations and features in the RunMyJobs Connector Catalog, ensuring you always know about the latest developments.

Data management and orchestration FAQs

What is the difference between data orchestration and ETL?

Data orchestration and extract, transform, load (ETL) both involve managing and processing data, but they serve distinct purposes. ETL typically runs as batch processes: it extracts data from various sources, transforms it into a destination-specific structure and format and loads it into a data warehouse, data lake or other storage system for analysis.
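To make the three ETL stages concrete, here is a minimal batch ETL sketch using only the Python standard library. The CSV text stands in for a source system such as an ERP or CRM export, an in-memory SQLite database stands in for the data warehouse, and the table and column names are hypothetical.

```python
import csv
import io
import sqlite3
from typing import Dict, List

# Hypothetical source extract: in practice this might be an ERP or CRM export.
RAW_SALES_CSV = """region,amount
north,120.50
south,80.00
north,99.50
"""


def extract(raw: str) -> List[Dict[str, str]]:
    """Extract: read rows from the source as plain strings."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: List[Dict[str, str]]) -> Dict[str, float]:
    """Transform: cast amounts to numbers and total them per region."""
    totals: Dict[str, float] = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + float(row["amount"])
    return totals


def load(totals: Dict[str, float], conn: sqlite3.Connection) -> None:
    """Load: write the destination-specific structure into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)", totals.items())
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_SALES_CSV)), conn)
print(conn.execute("SELECT region, total FROM sales_by_region ORDER BY region").fetchall())
# [('north', 220.0), ('south', 80.0)]
```

A script like this is a single ETL job. Orchestration, described next, is the layer that decides when such jobs run, what they wait for and what happens when one of them fails.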

Data orchestration coordinates complex data workflows to ensure various data processes run smoothly across an organization. It involves managing dependencies, scheduling tasks and integrating various data sources and tools, including ETL processes.

Using data and workflow orchestration tools like RunMyJobs, data teams can standardize data governance and automate the scheduling, execution and monitoring of data orchestration tasks to deliver timely end-to-end data pipeline operations.

Read more about the ETL automation process and how RunMyJobs helps improve data quality and enhance the scalability of data pipelines.

What is orchestration of data pipelines?

Orchestration of data pipelines involves managing and automating data flow between various systems and processes. It ensures that data is collected, processed and delivered efficiently across different stages of the data lifecycle. This includes scheduling tasks, managing dependencies and handling failures to ensure smooth data workflows.

Data orchestration platforms automate processes and allow data teams to define, schedule and monitor complex data workflows. These activities ensure that data from multiple sources is processed and available for analytics and business intelligence. These platforms optimize resources, reduce bottlenecks and maintain data quality throughout the pipeline.

Learn about jobs-as-code (JaC) and how it breaks down silos and enables seamless communication and coordination between different automation tasks, including data pipelines.

What is data flow orchestration?

Data flow orchestration involves coordinating and automating the movement and processing of data across different systems and workflows. It ensures that data tasks are executed in the correct sequence, managing dependencies and handling any errors that occur during the process. Done properly, orchestration maintains data integrity and efficiency in complex data ecosystems.

Tools used for data flow orchestration, a key part of the modern data stack, allow organizations to define Directed Acyclic Graphs (DAGs) that outline data workflows, schedule their execution and monitor their performance. These platforms support integration with various data sources and technologies to enable real-time data processing and optimization across the data lifecycle.
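The following sketch shows, in generic Python, how a DAG determines execution order: each task lists the tasks it depends on, the standard library's `graphlib.TopologicalSorter` yields them so that dependencies always come first, and any task whose upstream failed is skipped instead of run on incomplete data. The task names and the `run_dag` helper are hypothetical, and a production orchestrator would also run independent tasks in parallel, which this sequential sketch does not.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Set

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DAG: Dict[str, Set[str]] = {
    "extract_crm": set(),
    "extract_erp": set(),
    "transform": {"extract_crm", "extract_erp"},
    "load_warehouse": {"transform"},
    "refresh_bi": {"load_warehouse"},
}


def run_dag(dag: Dict[str, Set[str]], tasks: Dict[str, Callable[[], None]]) -> Dict[str, str]:
    """Run tasks in dependency order; skip any task whose upstream did not succeed."""
    status: Dict[str, str] = {}
    # static_order() yields every task after all of its dependencies.
    for name in TopologicalSorter(dag).static_order():
        if any(status[dep] != "succeeded" for dep in dag.get(name, set())):
            status[name] = "skipped"  # do not process incomplete upstream data
            continue
        try:
            tasks[name]()
            status[name] = "succeeded"
        except Exception:
            status[name] = "failed"
    return status


def failing_erp_extract() -> None:
    raise RuntimeError("ERP connection refused")


tasks: Dict[str, Callable[[], None]] = {name: (lambda: None) for name in DAG}
tasks["extract_erp"] = failing_erp_extract
# extract_erp fails, so transform, load_warehouse and refresh_bi are all skipped.
print(run_dag(DAG, tasks))
```

Because the graph is acyclic, a valid order always exists; `TopologicalSorter` raises `graphlib.CycleError` if a circular dependency is introduced by mistake.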

Read about the evolution of process automation and how different automation tools can help with data management and orchestrating data flow.

What is data orchestration?

Data orchestration is the automated coordination and management of data processes and workflows. It involves scheduling, monitoring and ensuring the proper execution of data tasks across various systems. Data orchestration aims to streamline data collection, processing and delivery for efficient and accurate data analysis and reporting.

Data orchestration platforms manage complex data workflows by defining and executing tasks in a predefined order. They support integration with diverse data sources and tools, facilitating real-time data processing and ensuring data quality. They are essential for managing data silos, optimizing data pipelines and supporting advanced analytics and machine learning initiatives in cloud-based and on-premises environments.

Learn more about how data orchestration fits into service orchestration and automation platforms — and why it matters.