From data chaos to data clarity

We talk about data management a lot here at Redwood Software. Regardless of industry or automation use cases, this topic comes into play — even when it doesn’t sound like it.

We might be discussing aggregating long-term sales figures or reconciling stock and inventory with customers, but those are ways of talking about the business rules that we apply on top of data management processes.

In many organizations, the departments that define those rules own some of the process of moving or manipulating data. Other teams collect their data — the raw sources — and use it for their distinct purposes. Centralized teams work to manage sources of truth in major business apps or master data repositories, and a myriad of data sources exist at the edge. Point-of-sale (POS), remote office and legacy systems add to the potential chaos of separate but interconnected flows of data.

Although some teams may feel their part of the flow is under control, others are re-running processes, modifying data manually to allow other processes to work or resorting to collecting raw data to reach their goals.

As data grows more complex, incomplete control over the data pipelines that run the modern business becomes an ever greater threat.

Data: “The new oil”

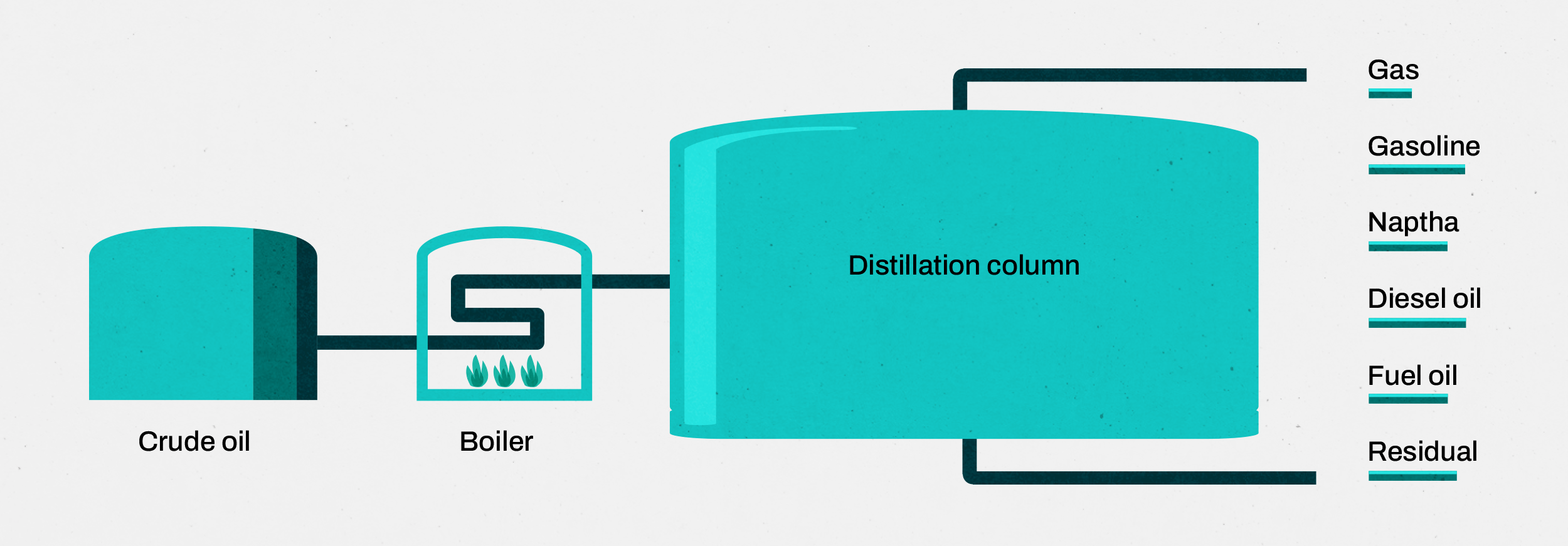

We all agree: Data is one of the most precious resources for today’s enterprise. It drives new industry as oil once did. We can extend this analogy to data management — it’s the critical refinement process, without which data is largely worthless.

This analogy is useful if we consider an oil pipeline diagram. With the extraction, refinement, transportation and consumption of those refined products, there are many parallels with data management pipelines.

While the oil pipeline starts with the flow of mostly one type of thing, data pipelines start fragmented, coming from tens, hundreds or even thousands of different sources. Data, therefore, presents a far larger and more complex problem than the pre-treatment of crude oil before it enters the refinement process.

A critical part is missing in our pipeline analogy that affects everything else: the creation of the data. Data pipelines start with business activities, interactions with customers, apps, points of sale, Internet of Things (IoT) and more.

Old-school posters may have shown the natural processes that created the oil, but unlike the geological speed of those processes, our valuable resource is being written, re-written and consumed in seconds, and the inputs to our process are chaotic, unruly and spread out.

Blockages in data pipelines

A data pipeline is a complex web of data sources, logistics and analytics processes that underpin business operations.

In some organizations, this web may look like it was made by a drunk spider, characterized by fragmented and siloed data sets and solutions. If this sounds familiar, you’ll likely perceive data management as laborious and error-prone.

Without a cohesive automation strategy, many IT and data management teams encounter the following struggles.

- Waiting for other teams to do their part of the process. This delays downstream activities, especially if different teams have different ways of sharing data and information and managing the scheduling of tasks.

- Vendors or departments changing data formats. You may need to reconfigure multiple scripts, fields or tools that impact many tasks.

- New technology requiring a new method or skill. Data management tasks may rely on technology that uses a different protocol or standard than what your business has used thus far.

- Managing multiple automation tools with narrow use cases. If many business systems are using their own schedulers and automation services, tool sprawl can be a major time sink for your IT team.

- Teams manually unpicking changes to data sets. This makes it cumbersome to run the same process or script again in the next data management step.

All these issues affect the quality, compliance and timeliness of data used in decision-making.
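As one illustration of the format-change struggle above, a thin mapping layer can absorb renamed fields so that downstream scripts see only one canonical shape. The sketch below is a minimal example under stated assumptions: the field names, the aliases and the `normalize_record` helper are all hypothetical and not taken from any particular product.

```python
from typing import Any

# Hypothetical aliases: each canonical field name maps to the vendor
# spellings seen so far. All names here are illustrative assumptions.
FIELD_ALIASES: dict[str, tuple[str, ...]] = {
    "sku": ("sku", "SKU", "item_code"),
    "quantity": ("quantity", "qty", "Qty"),
    "unit_price": ("unit_price", "price", "UnitPrice"),
}


def normalize_record(raw: dict[str, Any]) -> dict[str, Any]:
    """Map a vendor record onto canonical field names.

    Raises KeyError when a canonical field has no matching alias, so an
    unexpected format change fails loudly instead of leaving silent gaps.
    """
    normalized: dict[str, Any] = {}
    for canonical, aliases in FIELD_ALIASES.items():
        for alias in aliases:
            if alias in raw:
                normalized[canonical] = raw[alias]
                break
        else:
            # No alias matched this canonical field.
            raise KeyError(f"no known alias for {canonical!r} in {sorted(raw)}")
    return normalized
```

With this approach, a new vendor spelling means adding one alias in one place rather than reconfiguring every script that reads the data.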

Drilling for data

Distilling data into actionable insights that drive business operations and decisions also reflects the same basic steps that we see in the oil pipeline analogy.

Extraction

Collecting data from all sources at the right time and coordinating its delivery downstream to the next stage is no small feat. There are many tools for collecting data, and some teams use bespoke scripts and niche solutions.

Refinement

Once extracted, the data is manipulated and analyzed to make it more useful for different processes and tasks. New data sets may be created with different values, conversions of data formats or standards and different types of analysis to perform calculations and correlations.

Transportation

Lastly, data is loaded into destination systems and delivered to the end consumer or used in other processes.

The above stages align nicely with a commonly discussed data management process: Extract, Transform, Load (ETL).
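To make the three stages concrete, here is a minimal ETL sketch using only the Python standard library. Everything in it is an assumption for illustration: the two inline sources (`POS_CSV`, `WEB_ORDERS`), the `sku_totals` table and the function names are hypothetical, and a real pipeline would read from actual systems rather than constants.

```python
import csv
import io
import sqlite3

# Hypothetical raw inputs standing in for two separate data sources.
POS_CSV = "store,sku,qty\nnorth,A1,2\nsouth,A1,5\n"
WEB_ORDERS = [{"store": "web", "sku": "A1", "qty": "3"}]


def extract() -> list[dict[str, str]]:
    """Extraction: collect rows from every source into one list."""
    pos_rows = list(csv.DictReader(io.StringIO(POS_CSV)))
    return pos_rows + WEB_ORDERS


def transform(rows: list[dict[str, str]]) -> list[tuple[str, int]]:
    """Refinement: convert quantities to integers and total them per SKU."""
    totals: dict[str, int] = {}
    for row in rows:
        totals[row["sku"]] = totals.get(row["sku"], 0) + int(row["qty"])
    return sorted(totals.items())


def load(conn: sqlite3.Connection, totals: list[tuple[str, int]]) -> None:
    """Transportation: write the aggregated totals to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sku_totals (sku TEXT PRIMARY KEY, qty INTEGER)"
    )
    conn.executemany("INSERT OR REPLACE INTO sku_totals VALUES (?, ?)", totals)
    conn.commit()


def run_etl(conn: sqlite3.Connection) -> None:
    """Chain the three stages so each one feeds the next."""
    load(conn, transform(extract()))
```

Calling `run_etl(sqlite3.connect(":memory:"))` runs all three stages against an in-memory database. Keeping each stage as its own function mirrors the separation described above and lets a scheduler run, retry or replace one stage without touching the others.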

Organized chaos

To move from data chaos to data clarity, it’s vital to understand the difference between data management techniques and data management processes.

We’ve been using some terms associated with data management so far, such as ETL. Using that and two other relevant examples, this table explains where they fit into the data management function and the oil analogy that’s been serving us so well.

| Component | Definition | Context | In an oil pipeline |
| --- | --- | --- | --- |
| Extract, Transform, Load (ETL) | A three-stage process of consolidating data from multiple sources into a single, coherent data store, typically a data warehouse or data lake | ETL is primarily a set of techniques used in data integration. | The technical processes by which the oil is collected and refined, for which most companies largely use the same method but with their own nuances |
| Master Data Management (MDM) | A foundational layer of various business processes that provides a unified, accurate view of critical data entities like customers, products and suppliers across an organization | MDM is more than just a process; it’s a comprehensive discipline that involves policies, governance, procedures and technological frameworks aimed at managing critical data entities consistently across an enterprise. | The properties, names and types of materials used in the process and the standards, names and types of products that are produced (e.g., Premium 91–94 octane fuel) |
| Backup and Recovery | An IT-centric process focused on data protection and disaster recovery | This is a critical IT process involving specific operations to copy and store data and restore it when necessary. | A sub-process, perhaps one that deals with the disposal of waste or long-term storage of raw materials |

How data sources impact the pipeline

To further bring order to the fragmented chaos we often see in data management, we need to also look at sources of data and understand how they play a part in the whole.

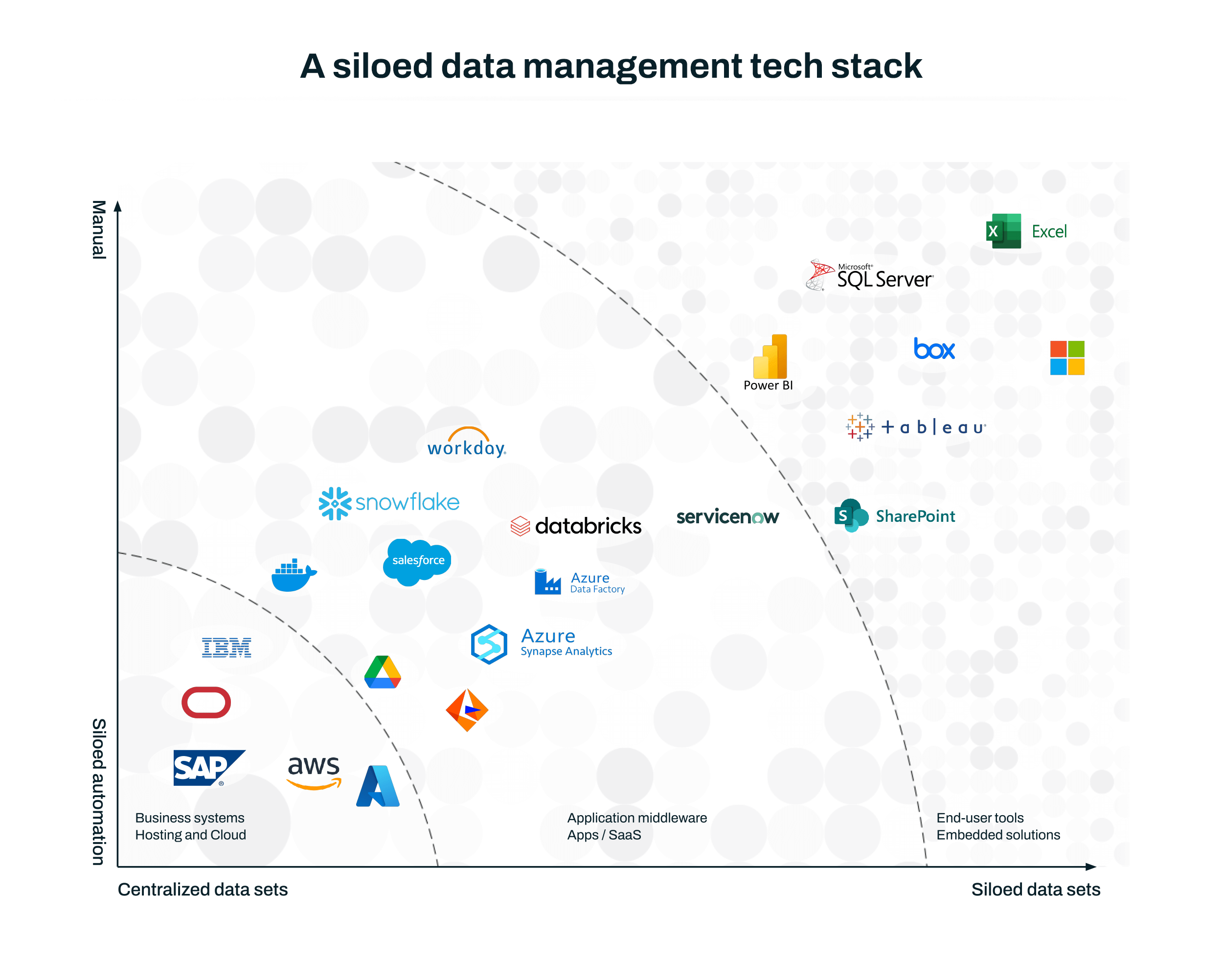

We can categorize data sources in many ways. The accompanying diagram plots each system’s significance to business processes against how close it sits to end users, customers and the edge of the business system architecture.

At the bottom left, we see data stores and hosting for customer-facing apps and back-end processes.

These systems likely have built-in automation capabilities, but they’re either narrow in focus or shallow in capability to integrate with other systems.

The right automation solutions can govern and manage automation in this section, ensuring tasks take place at the right time and dependencies are managed.

In the middle, we have middleware, pure data storage and analytics.

These systems sometimes have a limited set of automation capabilities. They integrate well with the systems on the bottom left but may struggle with the complex data sets coming in from other systems towards the top right.

At the top right, you’ll find end-user tools and productivity apps.

These tools are often both a data destination and a data source, and end-user control over them creates problems for data integrity and availability.

And a special mention: embedded solutions.

These are the out-in-the-wild systems, such as IoT and POS. They’re often legacy systems that can be specific and problematic to deal with, especially in the event of a failure. Data sources are often spread out with data sets per device or location.

Data tends to flow from embedded solutions and business apps into middleware and business systems before being sent back to business apps, end-user tools and reporting apps.

Springing a leak

With parts of the processes spread out across tools, each governed by a different system or team, gaps and cracks start to appear. In those cracks, data management processes unravel in frustrating ways.

A robust automation strategy allows us to design seamlessly integrated, efficient data management processes. If errors do occur, you can build in logic and pre-configure reactions to problems, allowing your data pipeline to progress and repair without laborious unpicking and troubleshooting.

Workload automation’s broad capability to automate every stage of the pipeline means all the disconnected activities can be joined up: the end of one step flows immediately into the start of another with maximum efficiency.
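The idea of pre-configured reactions to problems can be sketched in a few lines of Python. This is a generic illustration, not how RunMyJobs or any specific workload automation tool implements it: the `Step` class, the `run_pipeline` function and their parameters are hypothetical names chosen for this example.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """One pipeline step plus its pre-configured reaction to failure."""

    name: str
    action: Callable[[], None]
    retries: int = 2  # extra attempts after the first failure (must be >= 0)
    on_failure: Optional[Callable[[Exception], None]] = None  # fallback reaction


def run_pipeline(steps: list[Step], delay_seconds: float = 0.0) -> list[str]:
    """Run steps in order and return a log line for each one.

    A failing step is retried; if every attempt fails, its fallback runs
    and the pipeline continues. Without a fallback, the pipeline stops.
    """
    log: list[str] = []
    for step in steps:
        last_error: Optional[Exception] = None
        for attempt in range(1, step.retries + 2):
            try:
                step.action()
                log.append(f"{step.name}: ok after {attempt} attempt(s)")
                break
            except Exception as exc:
                last_error = exc
                time.sleep(delay_seconds)
        else:
            # Every attempt failed: apply the configured reaction or stop.
            if step.on_failure is None:
                raise RuntimeError(f"step {step.name!r} failed") from last_error
            step.on_failure(last_error)
            log.append(f"{step.name}: recovered by fallback")
    return log
```

A step that hits a transient error succeeds on a later attempt, a step with a fallback lets the pipeline carry on, and a step with neither halts the run so nothing downstream consumes bad data. The reaction is declared alongside the step, so recovery does not depend on someone unpicking the failure by hand.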

By reliably automating business-as-usual processes, your team can take on higher-value and more interesting tasks instead of just keeping the lights on. With end-to-end automation, it’s common to experience increased velocity, more reliable input for key decisions and peace of mind around data compliance.

Discover how RunMyJobs by Redwood can bring clarity to your data management processes: Book a demo.

About The Author

Dan Pitman

Dan Pitman is a Senior Product Marketing Manager for RunMyJobs by Redwood. His 25-year technology career has spanned roles in development, service delivery, enterprise architecture and data center and cloud management. Today, Dan focuses his expertise and experience on enabling Redwood’s teams and customers to understand how organizations can get the most from their technology investments.